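SoftMax Regression

1. Clustering and classification: use sklearn to generate K clusters of data, use these data as the dataset for training a neural network, and classify them with a softmax layer and the cross-entropy loss. Requirements: K > 3, at least 1000 samples, other parameters as given in the course slides. Split the generated data into training and test sets at a 9:1 ratio, then evaluate the model trained on the training set on the test set. The model accuracy must be at least 99%.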
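For logits $z$ and true class $y$, softmax gives $p_k = e^{z_k} / \sum_j e^{z_j}$ and the cross-entropy loss is $L = -\log p_y$. In PyTorch, `nn.CrossEntropyLoss` (and `F.cross_entropy`) combines a log-softmax over the raw network outputs with the negative log-likelihood of the true class, which is why the losses printed later carry `grad_fn=<NllLossBackward0>`. A framework-free sketch of the two formulas for a single sample, assuming plain Python lists (the function names are illustrative, not from the original code):

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtract the largest logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, label):
    """Cross-entropy of one sample: -log of the softmax probability of the true class."""
    return -math.log(softmax(logits)[label])

# Four equal logits give every class probability 1/4, so the loss is ln 4.
print(softmax([0.0, 0.0, 0.0, 0.0]))           # [0.25, 0.25, 0.25, 0.25]
print(round(cross_entropy([0.0] * 4, 2), 4))   # 1.3863
```

Subtracting the maximum logit does not change the result (it cancels in the ratio) but keeps `math.exp` from overflowing on large logits.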

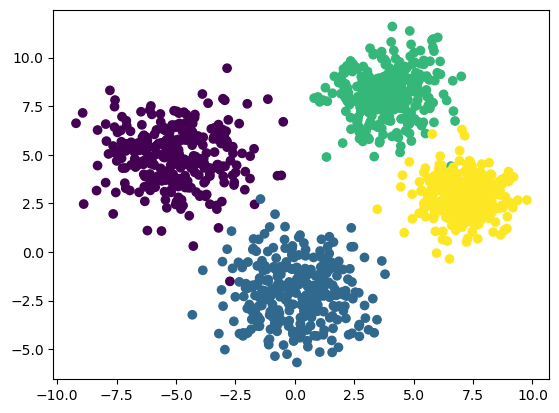

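What was done: generated 1200 samples of 4 classes with the make_blobs function from sklearn.datasets. The cluster centers are [-5, 5], [0, -2], [4, 8], [7, 3] with standard deviations (cluster_std) [1.5, 1.5, 1.2, 1], giving 300 samples per class. The samples were split into a training set and a test set at a 9:1 ratio; a network was built, trained on the training set with the cross-entropy loss, and validated on the test set.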
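With an integer `n_samples`, make_blobs divides the samples equally among the centers (1200 / 4 = 300 per class) and, by default, shuffles them, so taking the first 90% as the training set is already a random 9:1 split. The sketch below mimics that setup with the standard library only; `make_gaussian_blobs` is a hypothetical helper for illustration, not sklearn's implementation:

```python
import random

def make_gaussian_blobs(centers, stds, n_per_class, seed=0):
    """Hypothetical helper: draw n_per_class 2-D points around each center
    with isotropic Gaussian noise of the given standard deviation."""
    rng = random.Random(seed)
    samples = []
    for label, ((cx, cy), std) in enumerate(zip(centers, stds)):
        for _ in range(n_per_class):
            samples.append(((rng.gauss(cx, std), rng.gauss(cy, std)), label))
    rng.shuffle(samples)  # mix the classes so a positional split is random
    return samples

centers = [[-5, 5], [0, -2], [4, 8], [7, 3]]
stds = [1.5, 1.5, 1.2, 1]
samples = make_gaussian_blobs(centers, stds, n_per_class=300)

n_train = len(samples) * 9 // 10          # 9:1 split
train, test = samples[:n_train], samples[n_train:]
print(len(samples), len(train), len(test))  # 1200 1080 120
```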

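2. Iris classification: the Iris dataset contains 150 samples, one per row. Each row holds four features of a sample plus its class label. The dataset is used to classify iris flowers: each sample has four features, namely sepal length, sepal width, petal length, and petal width. Train a neural-network classifier that uses these four features to decide whether a sample is Iris setosa, Iris versicolor, or Iris virginica. The dataset file is iris.csv. The model accuracy must be at least 99%.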

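What was done: loaded the iris dataset from iris.csv, inspected the samples, standardized the features, split the data into a training set and a test set, built the network, trained it on the training set with the cross-entropy loss, and validated it on the test set.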

Clustering and classification

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset, Dataset
import numpy as np
import pandas as pd
from matplotlib import pyplot as plt
import torch.nn.functional as F
from sklearn.datasets import make_blobs

# 1200 two-dimensional samples in 4 clusters (300 per cluster)
data, target = make_blobs(n_samples=1200, n_features=2,
                          centers=[[-5, 5], [0, -2], [4, 8], [7, 3]],
                          cluster_std=[1.5, 1.5, 1.2, 1])
plt.scatter(data[:, 0], data[:, 1], c=target, marker='o')
plt.show()
```

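```python
# Convert to tensors: float32 features, int64 class labels (required by the loss)
data = torch.from_numpy(data).type(torch.FloatTensor)
target = torch.from_numpy(target).type(torch.LongTensor)

# make_blobs already shuffles, so the first 1080 samples (90%) form the
# training set and the last 120 (10%) form the test set
train_x = data[:1080]
train_y = target[:1080]
test_x = data[1080:]
test_y = target[1080:]

train_dataset = TensorDataset(train_x, train_y)
test_dataset = TensorDataset(test_x, test_y)
train_loader = DataLoader(train_dataset, batch_size=32, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=16, shuffle=True)
```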

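```python
class model(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(2, 5)   # 2 input features -> 5 hidden units
        self.fc2 = nn.Linear(5, 4)   # 5 hidden units -> 4 class scores (logits)

    def forward(self, x):
        x = F.relu(self.fc1(x))      # hidden activation assumed; not recoverable from the source
        x = self.fc2(x)
        return x                     # raw logits; softmax is applied inside the loss

net = model()
criterion = nn.CrossEntropyLoss()    # log-softmax + negative log-likelihood
optimizer = torch.optim.SGD(net.parameters(), lr=0.01)  # optimizer type assumed; lr=0.01 is from the source
```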
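The network is small. Each `nn.Linear(in, out)` stores an out × in weight matrix plus an out-dimensional bias, so the number of trainable parameters for the layer sizes 2 → 5 → 4 can be checked by hand; in PyTorch the same number is `sum(p.numel() for p in net.parameters())`. A minimal sketch (the helper name is illustrative):

```python
def mlp_param_count(layer_sizes):
    """Count weights + biases of a stack of fully connected (nn.Linear-style) layers."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix + bias vector
    return total

print(mlp_param_count([2, 5, 4]))  # 39 for the clustering network
print(mlp_param_count([4, 5, 3]))  # 43 for the iris network
```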

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset, Dataset
import numpy as np
import pandas as pd
import torch.nn.functional as F
import torch.optim as optim
```

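```python
# Only the layer sizes 2 -> 4 -> 1 survive for this cell. A single output unit
# cannot be trained with the 4-class cross-entropy loss, so the losses logged
# below must come from a model with 4 outputs.
class model(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(2, 4)
        self.fc2 = nn.Linear(4, 1)

    def forward(self, x):
        x = F.relu(self.fc1(x))  # activation assumed; not recoverable from the source
        x = self.fc2(x)
        return x
```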

```python
net = model()
```

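```python
for epoch in range(1000):
    for i, batch in enumerate(train_loader):
        x, y = batch
        pred = net(x)
        loss = criterion(pred, y)   # cross-entropy on raw logits
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    if epoch % 100 == 0:
        print(loss)                 # loss of the last batch in this epoch
```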

```
tensor(1.2986, grad_fn=<NllLossBackward0>)
tensor(0.0348, grad_fn=<NllLossBackward0>)
tensor(0.0084, grad_fn=<NllLossBackward0>)
tensor(0.0275, grad_fn=<NllLossBackward0>)
tensor(0.0479, grad_fn=<NllLossBackward0>)
tensor(0.0559, grad_fn=<NllLossBackward0>)
tensor(0.0055, grad_fn=<NllLossBackward0>)
tensor(0.0015, grad_fn=<NllLossBackward0>)
tensor(0.0134, grad_fn=<NllLossBackward0>)
tensor(0.0884, grad_fn=<NllLossBackward0>)
```
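The first logged loss, 1.2986, is close to ln 4 ≈ 1.386, the loss of a 4-class model that still predicts roughly uniform probabilities. Each training step back-propagates the cross-entropy; for softmax plus cross-entropy the gradient with respect to the logits has the closed form $\partial L / \partial z = p - \text{onehot}(y)$. A framework-free sketch of single-sample SGD on a linear softmax classifier (no hidden layer, unlike the network above; all names and the toy sample are hypothetical):

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def sgd_step(W, b, x, label, lr):
    """One SGD step for logits z = W x + b; updates W and b in place.

    Uses dL/dz = softmax(z) - onehot(label). Returns the loss before the update.
    """
    z = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    p = softmax(z)
    loss = -math.log(p[label])
    for k in range(len(W)):
        g = p[k] - (1.0 if k == label else 0.0)  # dL/dz_k
        for j in range(len(x)):
            W[k][j] -= lr * g * x[j]
        b[k] -= lr * g
    return loss

# Toy run: 4 classes, 2 features, all parameters start at zero.
W = [[0.0, 0.0] for _ in range(4)]
b = [0.0] * 4
x, label = [4.0, 8.0], 2  # a point near the [4, 8] center
losses = [sgd_step(W, b, x, label, lr=0.01) for _ in range(50)]
print(round(losses[0], 4), losses[-1] < losses[0])  # 1.3863 True
```

With zero-initialized parameters the first loss is exactly ln 4; in PyTorch the random initialization makes it only approximately so.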

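```python
def rightness(predictions, labels):
    # index of the largest output in each row = predicted class
    pred = torch.max(predictions.data, 1)[1]
    # number of predictions that match the labels
    rights = pred.eq(labels.data.view_as(pred)).sum()
    return rights, len(labels)
```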
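`torch.max(predictions, 1)[1]` returns the argmax index along dimension 1. Softmax is monotonic, so the argmax of the raw logits equals the argmax of the softmax probabilities and no explicit softmax is needed at prediction time. A pure-Python analogue for illustration (`rightness_py` is a hypothetical name):

```python
def rightness_py(logit_rows, labels):
    """Argmax of each row compared with its label.

    Returns (correct count, sample count) like rightness(), so per-batch
    results can be summed and divided at the end.
    """
    preds = [max(range(len(row)), key=row.__getitem__) for row in logit_rows]
    rights = sum(int(p == y) for p, y in zip(preds, labels))
    return rights, len(labels)

logits = [[2.0, 0.1, -1.0, 0.3],   # argmax 0
          [0.2, 0.1, 3.0, 0.0],    # argmax 2
          [0.0, 1.5, 0.2, 0.1]]    # argmax 1
print(rightness_py(logits, [0, 2, 3]))  # (2, 3)
```

Summing these pairs over all test batches and dividing gives the accuracy; for the clustering run below this is 119 / 120 ≈ 0.9917, which meets the 99% requirement.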

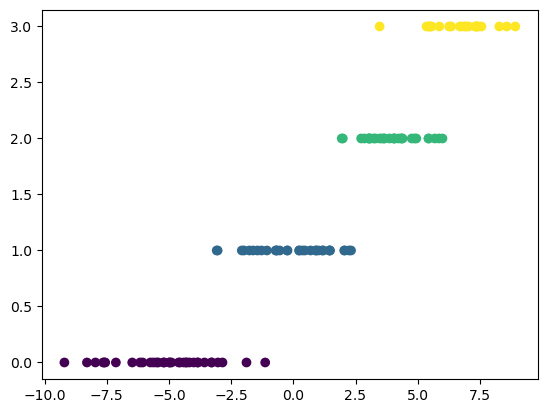

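```python
print(test_x.shape, test_y.shape, test_x[2], target)
plt.scatter(test_x[:, 0], test_x[:, 1], c=target[1080:], marker='o')  # test set, coloured by class
plt.show()

rights = 0
length = 0
with torch.no_grad():  # no gradients are needed for evaluation
    for i, batch in enumerate(test_loader):
        x = batch[0]
        y = batch[1]
        pred = net(x)
        print(y)                                  # true labels
        print(torch.max(pred.data, 1)[1], '\n')   # predicted labels
        r, l = rightness(pred, y)
        rights += r
        length += l
print(rights, length, rights / length)
```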

```
torch.Size([120, 2]) torch.Size([120]) tensor([4.7586, 6.5412]) tensor([1, 0, 1, ..., 0, 0, 1])
tensor([0, 0, 2, 1, 1, 0, 1, 0, 3, 0, 1, 3, 3, 1, 1, 1])
tensor([0, 0, 2, 1, 1, 0, 1, 0, 3, 0, 1, 3, 3, 1, 1, 1])

tensor([0, 1, 2, 1, 2, 0, 0, 1, 2, 2, 3, 2, 1, 3, 2, 2])
tensor([0, 1, 2, 1, 2, 0, 0, 1, 2, 2, 3, 2, 0, 3, 2, 2])

tensor([0, 0, 3, 0, 3, 0, 2, 0, 2, 1, 3, 0, 3, 2, 0, 0])
tensor([0, 0, 3, 0, 3, 0, 2, 0, 2, 1, 3, 0, 3, 2, 0, 0])

tensor([3, 3, 0, 0, 2, 0, 0, 3, 0, 3, 3, 1, 2, 2, 3, 3])
tensor([3, 3, 0, 0, 2, 0, 0, 3, 0, 3, 3, 1, 2, 2, 3, 3])

tensor([0, 0, 1, 0, 1, 0, 3, 2, 1, 1, 1, 2, 2, 2, 0, 0])
tensor([0, 0, 1, 0, 1, 0, 3, 2, 1, 1, 1, 2, 2, 2, 0, 0])

tensor([2, 1, 1, 0, 3, 2, 2, 1, 1, 1, 1, 0, 2, 2, 0, 2])
tensor([2, 1, 1, 0, 3, 2, 2, 1, 1, 1, 1, 0, 2, 2, 0, 2])

tensor([0, 1, 3, 2, 2, 2, 3, 1, 1, 0, 3, 2, 0, 3, 1, 0])
tensor([0, 1, 3, 2, 2, 2, 3, 1, 1, 0, 3, 2, 0, 3, 1, 0])

tensor([2, 1, 2, 1, 1, 1, 0, 3])
tensor([2, 1, 2, 1, 1, 1, 0, 3])

tensor(119) 120 tensor(0.9917)
```

Iris classification

Using the four features sepal length, sepal width, petal length, and petal width, train a neural-network classifier on the iris.csv dataset.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import numpy as np
import pandas as pd
from torch.utils.data import Dataset, DataLoader, TensorDataset
from sklearn.utils import shuffle
import matplotlib.pyplot as plt
```

```python
data = pd.read_csv('iris.csv')
# Replace species names with integer class indices 0, 1, 2
for i in range(len(data)):
    if data.loc[i, 'Species'] == 'setosa':
        data.loc[i, 'Species'] = 0
    if data.loc[i, 'Species'] == 'versicolor':
        data.loc[i, 'Species'] = 1
    if data.loc[i, 'Species'] == 'virginica':
        data.loc[i, 'Species'] = 2

data.head()
```

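| | Unnamed: 0 | Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species |
|---|---|---|---|---|---|---|
| 0 | 1 | 5.1 | 3.5 | 1.4 | 0.2 | 0 |
| 1 | 2 | 4.9 | 3.0 | 1.4 | 0.2 | 0 |
| 2 | 3 | 4.7 | 3.2 | 1.3 | 0.2 | 0 |
| 3 | 4 | 4.6 | 3.1 | 1.5 | 0.2 | 0 |
| 4 | 5 | 5.0 | 3.6 | 1.4 | 0.2 | 0 |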
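The loop above turns each species name into the integer class index that `nn.CrossEntropyLoss` expects as its target. The same mapping can be written as a dictionary lookup, which also fails loudly (KeyError) on an unexpected name instead of leaving it unchanged; in pandas the equivalent would be `data['Species'] = data['Species'].map(SPECIES_TO_LABEL)`. A minimal sketch (the dictionary and function names are illustrative, not from the original code):

```python
SPECIES_TO_LABEL = {'setosa': 0, 'versicolor': 1, 'virginica': 2}

def encode_species(names):
    """Map species names to integer class indices; unknown names raise KeyError."""
    return [SPECIES_TO_LABEL[name] for name in names]

print(encode_species(['setosa', 'virginica', 'versicolor']))  # [0, 2, 1]
```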

```python
# 'Unnamed: 0' only holds row numbers (1, 2, 3, ...), so it is dropped
data = data.drop('Unnamed: 0', axis=1)
data.head()
```

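| | Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species |
|---|---|---|---|---|---|
| 0 | 5.1 | 3.5 | 1.4 | 0.2 | 0 |
| 1 | 4.9 | 3.0 | 1.4 | 0.2 | 0 |
| 2 | 4.7 | 3.2 | 1.3 | 0.2 | 0 |
| 3 | 4.6 | 3.1 | 1.5 | 0.2 | 0 |
| 4 | 5.0 | 3.6 | 1.4 | 0.2 | 0 |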

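```python
data = shuffle(data)           # sklearn.utils.shuffle returns the rows in random order
print(data.head())
data.index = range(len(data))  # renumber the rows 0..149 after shuffling
data.head()
```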

```
     Sepal.Length  Sepal.Width  Petal.Length  Petal.Width Species
62            6.0          2.2           4.0          1.0       1
122           7.7          2.8           6.7          2.0       2
130           7.4          2.8           6.1          1.9       2
125           7.2          3.2           6.0          1.8       2
112           6.8          3.0           5.5          2.1       2
```

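| | Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species |
|---|---|---|---|---|---|
| 0 | 6.0 | 2.2 | 4.0 | 1.0 | 1 |
| 1 | 7.7 | 2.8 | 6.7 | 2.0 | 2 |
| 2 | 7.4 | 2.8 | 6.1 | 1.9 | 2 |
| 3 | 7.2 | 3.2 | 6.0 | 1.8 | 2 |
| 4 | 6.8 | 3.0 | 5.5 | 2.1 | 2 |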

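```python
col_titles = ['Sepal.Length', 'Sepal.Width', 'Petal.Length', 'Petal.Width']
for i in col_titles:
    # z-score standardization: zero mean, unit (sample) standard deviation
    mean, std = data[i].mean(), data[i].std()
    data[i] = (data[i] - mean) / std

data.head()
```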

| | Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species |
|---|---|---|---|---|---|
| 0 | 0.189196 | -1.966964 | 0.137087 | -0.261511 | 1 |
| 1 | 2.242172 | -0.590395 | 1.666574 | 1.050416 | 2 |
| 2 | 1.879882 | -0.590395 | 1.326688 | 0.919223 | 2 |
| 3 | 1.638355 | 0.327318 | 1.270040 | 0.788031 | 2 |
| 4 | 1.155302 | -0.131539 | 0.986802 | 1.181609 | 2 |
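Standardization rescales each feature to $(x - \mu) / \sigma$. The printed values are consistent with pandas' default sample standard deviation (ddof=1): for Sepal.Length the full-dataset mean is about 5.843 and the sample standard deviation about 0.828, so 6.0 maps to about 0.1892, as in the first row above. A standard-library sketch of the same transformation (the `zscore` name is illustrative):

```python
import statistics

def zscore(values):
    """Standardize a column: (x - mean) / sample standard deviation.

    statistics.stdev uses the n - 1 denominator, matching pandas Series.std()'s
    default ddof=1.
    """
    mean = statistics.mean(values)
    std = statistics.stdev(values)
    return [(v - mean) / std for v in values]

col = [6.0, 7.7, 7.4, 7.2, 6.8]  # first five shuffled Sepal.Length values
z = zscore(col)
print(statistics.mean(z), statistics.stdev(z))  # approximately 0 and 1
```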

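```python
# First 118 shuffled rows for training, last 32 for testing
train_data = data[:-32]
train_x = train_data.drop(['Species'], axis=1).values
train_y = train_data['Species'].values.astype(int)
train_x = torch.from_numpy(train_x).type(torch.FloatTensor)
train_y = torch.from_numpy(train_y).type(torch.LongTensor)

test_data = data[-32:]
test_x = test_data.drop(['Species'], axis=1).values
test_y = test_data['Species'].values.astype(int)
test_x = torch.from_numpy(test_x).type(torch.FloatTensor)
test_y = torch.from_numpy(test_y).type(torch.LongTensor)

train_dataset = TensorDataset(train_x, train_y)
test_dataset = TensorDataset(test_x, test_y)
train_loader = DataLoader(train_dataset, batch_size=16, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=16, shuffle=True)
```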

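```python
class model(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(4, 5)   # 4 features -> 5 hidden units
        self.fc2 = nn.Linear(5, 3)   # 5 hidden units -> 3 species scores (logits)

    def forward(self, x):
        x = F.relu(self.fc1(x))      # hidden activation assumed; not recoverable from the source
        x = self.fc2(x)
        return x                     # raw logits; softmax is applied inside the loss

net = model()
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(net.parameters(), lr=0.05)  # optimizer type assumed; lr=0.05 is from the source
```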
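The forward pass of this 4 → 5 → 3 network is two affine maps with a hidden nonlinearity in between, producing three raw class scores. A pure-Python sketch, assuming a ReLU hidden activation (which the extracted code does not show) and hand-picked hypothetical weights; as with `nn.Linear.weight`, each weight matrix is stored as one row per output unit:

```python
def relu(v):
    return [max(0.0, x) for x in v]

def linear(W, b, x):
    """y = W x + b, with W stored as a list of output rows (out_features x in_features)."""
    return [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]

def forward(params, x):
    """Hidden ReLU layer, then raw class scores (logits)."""
    (W1, b1), (W2, b2) = params
    return linear(W2, b2, relu(linear(W1, b1, x)))

# Hypothetical weights: 5 x 4 hidden layer, 3 x 5 output layer.
W1 = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1], [-1, 0, 0, 0]]
b1 = [0.0] * 5
W2 = [[1, 0, 0, 0, 0], [0, 0, 1, 0, 0], [0, 0, 0, 1, 0]]
b2 = [0.0, 0.0, 0.5]

logits = forward(((W1, b1), (W2, b2)), [0.5, -1.0, 2.0, 1.0])
print(logits)  # [0.5, 2.0, 1.5] -> predicted class 1 (versicolor)
```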

```python
for epoch in range(10000):
    for i, batch in enumerate(train_loader):
        x, y = batch
        pred = net(x)
        loss = criterion(pred, y)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    if epoch % 1000 == 0:
        print('Epoch', epoch, 'loss:', loss)
```

```
Epoch 0 loss: tensor(1.0210, grad_fn=<NllLossBackward0>)
Epoch 1000 loss: tensor(0.0007, grad_fn=<NllLossBackward0>)
Epoch 2000 loss: tensor(0.0010, grad_fn=<NllLossBackward0>)
Epoch 3000 loss: tensor(0.0004, grad_fn=<NllLossBackward0>)
Epoch 4000 loss: tensor(0.0008, grad_fn=<NllLossBackward0>)
Epoch 5000 loss: tensor(0.0007, grad_fn=<NllLossBackward0>)
Epoch 6000 loss: tensor(0.0010, grad_fn=<NllLossBackward0>)
Epoch 7000 loss: tensor(0.0001, grad_fn=<NllLossBackward0>)
Epoch 8000 loss: tensor(0.0006, grad_fn=<NllLossBackward0>)
Epoch 9000 loss: tensor(0.0001, grad_fn=<NllLossBackward0>)
```

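```python
def rightness(predictions, labels):
    # index of the largest output in each row = predicted class
    pred = torch.max(predictions.data, 1)[1]
    # number of predictions that match the labels
    rights = pred.eq(labels.data.view_as(pred)).sum()
    return rights, len(labels)
```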

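```python
rights = 0
length = 0
with torch.no_grad():  # no gradients are needed for evaluation
    for i, batch in enumerate(test_loader):
        x = batch[0]
        y = batch[1]
        pred = net(x)
        print(y)                                  # true labels
        print(torch.max(pred.data, 1)[1], '\n')   # predicted labels
        r, l = rightness(pred, y)
        rights += r
        length += l
print(rights, length, rights / length)
```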

```
tensor([2, 0, 1, 2, 1, 2, 1, 2, 2, 0, 1, 1, 0, 2, 0, 0])
tensor([2, 0, 1, 2, 2, 2, 1, 2, 2, 0, 1, 1, 0, 2, 0, 0])

tensor([1, 0, 1, 1, 0, 1, 0, 1, 0, 1, 1, 2, 1, 1, 1, 2])
tensor([2, 0, 1, 1, 0, 1, 0, 1, 0, 2, 1, 2, 1, 1, 1, 2])

tensor(29) 32 tensor(0.9062)
```
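An accuracy of 29 / 32 does not show which classes are confused. Tallying the two printed test batches into a confusion matrix (rows are true classes, columns are predictions) shows that all three errors are versicolor (1) samples predicted as virginica (2). That pattern fits the dataset: setosa is linearly separable from the other two species, while versicolor and virginica overlap. A minimal sketch using the labels copied from the output above:

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    m = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        m[t][p] += 1
    return m

# Labels and predictions of the two test batches printed above, concatenated.
y_true = [2, 0, 1, 2, 1, 2, 1, 2, 2, 0, 1, 1, 0, 2, 0, 0,
          1, 0, 1, 1, 0, 1, 0, 1, 0, 1, 1, 2, 1, 1, 1, 2]
y_pred = [2, 0, 1, 2, 2, 2, 1, 2, 2, 0, 1, 1, 0, 2, 0, 0,
          2, 0, 1, 1, 0, 1, 0, 1, 0, 2, 1, 2, 1, 1, 1, 2]

cm = confusion_matrix(y_true, y_pred, 3)
for row in cm:
    print(row)
# [9, 0, 0]
# [0, 12, 3]
# [0, 0, 8]
```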